This article was originally published in BDO Spotlight - June 2024

Artificial Intelligence (AI) has become a transformative force across industries, driving innovation and improving efficiency, decision-making, and user experiences in areas such as business, healthcare, transportation, and entertainment. However, the increasing reliance on AI underscores the critical need to ensure the trustworthiness and reliability of these systems. Establishing trust in AI systems is pivotal for their widespread adoption, and it can be accomplished through transparency, accountability, and robust testing and auditing frameworks.

This article examines the significance of these principles and highlights relevant frameworks, including recent initiatives undertaken by the Singapore government.

Transparency in AI Systems

Transparency in AI systems involves making the decision-making processes of AI models understandable to stakeholders. This includes providing clear explanations of how AI algorithms arrive at their conclusions and the data used to train them. Transparency is essential for several reasons:

- Building Trust: When stakeholders understand how an AI system works, they are more likely to trust its outputs.

- Identifying Bias: Transparency helps in identifying and mitigating biases in AI models, ensuring fairness.

- Facilitating Accountability: Transparent systems make it easier to hold developers and organisations accountable for AI decisions.

Transparency is a fundamental factor in cultivating trust in AI systems. Users and stakeholders require a clear understanding of how AI systems function and arrive at decisions. This means being open about the data used for training AI models, the algorithms utilised, and the potential biases or constraints of the system. By embracing transparency, AI developers and organisations can showcase their dedication to openness and integrity, thereby fostering trust among users and stakeholders.

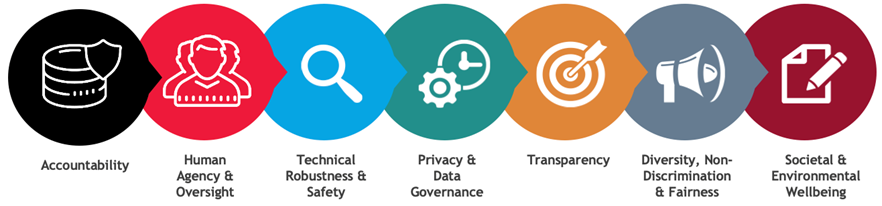

Figure 1: Requirements of AI Transparency

Case Study: Singapore PDPC AI Governance Framework

The Singapore Personal Data Protection Commission (PDPC) AI Governance Framework is a pioneering initiative aimed at promoting transparency and accountability in AI systems. It provides practical guidance to organisations on how to deploy AI responsibly. Key components of the framework include:

- Internal Governance Structures: Establishing clear roles and responsibilities for AI governance within organisations.

- Risk Management: Identifying and mitigating risks associated with AI deployment.

- Operationalising AI Ethics: Integrating ethical considerations into the development and deployment of AI systems.

Perhaps most importantly, the framework emphasises the need to operationalise AI ethics. This means integrating ethical considerations into every stage of AI development and deployment, from data collection to model deployment. By doing so, organisations can ensure that AI systems are designed to promote fairness, transparency, and accountability, rather than perpetuating harmful biases or stereotypes. This requires a deep understanding of the ethical implications of AI, as well as a commitment to ongoing monitoring and evaluation.

Through its comprehensive approach to AI governance, the PDPC framework provides a roadmap for organisations seeking to deploy AI responsibly. By establishing clear governance structures, managing risks, and operationalising AI ethics, organisations can unlock the full potential of AI while minimising its risks. As the use of AI continues to grow, the PDPC framework is poised to play a critical role in shaping the future of responsible AI development and deployment.

Accountability in AI Systems

Accountability in AI systems ensures that mechanisms are in place to hold individuals and organisations responsible for the actions and decisions made by AI. This involves:

- Clear Documentation: Maintaining detailed records of AI development processes, decision-making criteria, and data sources.

- Regulatory Compliance: Adhering to laws and regulations governing AI use.

- Responsibility Attribution: Clearly defining who is accountable for the AI system's behaviour and outcomes.

- Periodic Audits: Reviewing the AI system's performance, fairness, and adherence to ethical standards at regular intervals, allowing for the identification and correction of issues.
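The four measures above can be captured in a simple record kept alongside each model. The following is a minimal sketch only: the `AuditRecord` class, its field names, the 180-day audit interval, and the sample values are all hypothetical choices made for illustration, not part of any framework discussed in this article.

```python
from dataclasses import dataclass, field, asdict
from datetime import date
from typing import List
import json


@dataclass
class AuditRecord:
    """One entry in a hypothetical AI accountability log."""
    model_name: str
    model_version: str
    accountable_owner: str              # responsibility attribution
    data_sources: List[str]             # clear documentation
    applicable_regulations: List[str]   # regulatory compliance
    last_audit: date                    # periodic audits
    audit_interval_days: int = 180      # illustrative default, not a regulatory value
    findings: List[str] = field(default_factory=list)

    def audit_overdue(self, today: date) -> bool:
        """Return True when more days than the interval have passed since the last audit."""
        return (today - self.last_audit).days > self.audit_interval_days

    def to_json(self) -> str:
        """Serialise the record so it can be stored with other audit evidence."""
        payload = asdict(self)
        payload["last_audit"] = self.last_audit.isoformat()
        return json.dumps(payload, indent=2)


# Sample values are invented for the example.
record = AuditRecord(
    model_name="credit-scoring",
    model_version="1.4.0",
    accountable_owner="Model Risk Team",
    data_sources=["loan_applications_2023"],
    applicable_regulations=["PDPA"],
    last_audit=date(2024, 1, 15),
)

print(record.audit_overdue(date(2024, 9, 1)))
print(record.to_json())
```

Keeping the accountable owner and the audit date in the same record as the data sources means a reviewer can answer "who is responsible, and when was this last checked?" from a single place.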

Accountability is another pillar of dependable AI systems. Organisations must assume responsibility for the outcomes of their AI systems and have mechanisms in place to address issues or concerns as they arise. This involves establishing clear lines of accountability within organisations and implementing processes to rectify errors, biases, or unintended consequences of AI systems. By exhibiting accountability, organisations can instil confidence in their AI systems and demonstrate a commitment to upholding ethical standards and values.

Additionally, robust testing and auditing frameworks are imperative for ensuring the reliability and trustworthiness of AI systems. Rigorous testing under varied scenarios and conditions can help identify potential weaknesses or vulnerabilities and confirm that the systems function as intended.

| Measure | Description |
| --- | --- |
| Documentation | Keeping thorough records of AI model development and deployment |
| Regulatory Compliance | Ensuring adherence to relevant AI laws and regulations |
| Responsibility Attribution | Assigning accountability to specific individuals or teams |
| Regular Audits | Conducting periodic audits to ensure compliance and performance |

Framework Highlight: IMDA GenAI Model Governance Framework

The Infocomm Media Development Authority (IMDA) of Singapore introduced the GenAI Model Governance Framework to address accountability in AI. The framework focuses on:

- Model Lifecycle Management: Managing AI models throughout their lifecycle, from development to deployment and retirement.

- Ethical AI Development: Encouraging ethical considerations during AI development, such as fairness, transparency, and accountability.

- Continuous Monitoring: Implementing mechanisms for ongoing monitoring and evaluation of AI systems.

At its core, the framework recognises that AI models are not static entities, but rather dynamic systems that require ongoing management and oversight throughout their lifecycle. The framework's focus on Model Lifecycle Management is a critical component of this approach. It acknowledges that AI models are not one-time creations, but rather evolve over time through continuous updates, refinements, and iterations.

Through its focus on Model Lifecycle Management, Ethical AI Development, and Continuous Monitoring, the IMDA GenAI Model Governance Framework provides a comprehensive approach to addressing accountability in AI. By adopting this framework, organisations can ensure that their AI systems are developed and deployed in a responsible and trustworthy manner, and that they remain accountable to users and society over time.

Robust Testing and Auditing Frameworks

Robust testing and auditing frameworks are critical for ensuring the reliability and trustworthiness of AI systems. These frameworks involve:

- Comprehensive Testing: Conducting extensive tests to evaluate AI model performance under various conditions.

- Bias Detection: Identifying and mitigating biases in AI models through rigorous testing.

- Independent Audits: Engaging third-party auditors to review AI systems for compliance and performance.
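As one illustration of what bias detection can look like in practice, the sketch below compares how often a model gives a favourable decision to each group, a check often called demographic parity. It is a minimal example under stated assumptions: the function names, the toy data, and the 0.2 tolerance are hypothetical, and a real audit would use several fairness metrics chosen for the use case.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Return the share of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Toy data: 1 = favourable decision, 0 = unfavourable decision.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(preds, groups)       # {"A": 0.75, "B": 0.25}
gap = demographic_parity_gap(preds, groups)  # 0.5

THRESHOLD = 0.2  # hypothetical tolerance chosen for this example
flagged = gap > THRESHOLD
print(rates, gap, flagged)
```

A large gap does not prove unfair treatment on its own, but it tells the auditor where to look more closely.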

Figure 2: Example of the AI Algorithm Audit

Furthermore, regular audits of AI systems can offer insights into their performance, pinpoint areas for enhancement, and ensure their continued adherence to ethical and regulatory standards over time.

One strategy to foster trust in AI systems is to establish industry-wide standards and best practices. By defining common guidelines for transparency, accountability, testing, and auditing, the AI community can work together to ensure that AI systems are developed and deployed in a responsible and trustworthy manner. This can promote a level playing field for organisations and encourage the adoption of ethical practices across the industry. Drawing on frameworks such as the Singapore PDPC AI Governance Framework, AI Verify, Project Moonshot, and the IMDA GenAI Model Governance Framework in this collaborative effort can further solidify the ethical foundations of AI development and deployment. Moreover, industry, academia, and regulatory bodies must collaborate effectively to engender trust in AI systems.

Throughout this exploration of responsible AI principles and their imperative role in developing trustworthy systems, it has become evident that ethical considerations are not mere afterthoughts but foundational elements of contemporary AI projects. From the critical need for transparency and accountability to the essential practices for mitigating biases and ensuring fairness, the discourse underscores that implementing responsible AI principles is a multifaceted endeavour requiring ongoing commitment. This commitment not only safeguards stakeholders' trust but also propels the technological advancement of AI in a direction that is ethically sound and socially beneficial.

Role of Auditing in Maintaining AI Integrity

The auditing process in AI systems is integral to ensuring compliance, accountability, and maintaining the trust of stakeholders. Auditing encompasses several key aspects including data auditing, algorithm auditing, and outcome auditing, each critical for verifying that AI systems function as intended and adhere to regulatory standards. Best practices in AI auditing involve establishing clear audit criteria, employing independent auditors, and engaging in continuous improvement to address any identified issues.

Challenges such as system complexity and the dynamic nature of algorithms require sophisticated strategies and specialised knowledge. Auditors must be equipped with tools to understand and dissect complex machine learning models, natural language processing, and computer vision technologies. Regular re-evaluation and real-time monitoring techniques are essential to manage the dynamic algorithms that update themselves based on new data.
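One way to make regular re-evaluation concrete is to compare the model's recent output scores with a baseline captured when the model was last reviewed. The sketch below uses the Population Stability Index (PSI), a drift measure commonly used in credit-risk monitoring. It is an illustrative sketch, not a prescribed audit method: the function name, the bin edges, and the sample scores are assumptions, and the 0.25 cut-off is a widely quoted rule of thumb rather than a formal standard.

```python
import math


def population_stability_index(baseline, recent, edges, eps=1e-6):
    """PSI between two samples using shared bin edges.

    Sums (r - b) * ln(r / b) over bins, where b and r are the fractions
    of baseline and recent values in each bin. Values outside the edges
    are not counted in any bin.
    """
    def bin_fractions(values):
        counts = [0] * (len(edges) - 1)
        for v in values:
            for i in range(len(counts)):
                last = i == len(counts) - 1
                # Bins are [low, high), except the last bin, which also includes its upper edge.
                if edges[i] <= v < edges[i + 1] or (last and v == edges[-1]):
                    counts[i] += 1
                    break
        # eps avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), eps) for c in counts]

    b = bin_fractions(baseline)
    r = bin_fractions(recent)
    return sum((ri - bi) * math.log(ri / bi) for bi, ri in zip(b, r))


# Hypothetical model scores between 0 and 1.
baseline = [0.1, 0.2, 0.6, 0.7]   # scores at the last audit
recent = [0.1, 0.6, 0.7, 0.8]     # scores observed since then
edges = [0.0, 0.5, 1.0]

psi = population_stability_index(baseline, recent, edges)
needs_review = psi > 0.25  # rule-of-thumb threshold, treated here as an assumption
print(round(psi, 4), needs_review)
```

Running a check like this on a schedule gives auditors an early signal that a self-updating model, or the data feeding it, has moved away from what was originally assessed.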

Moreover, the integration of AI in audit processes, such as leveraging AI, cloud solutions, and data analytics, optimises audit steps by automating data collection, analysis, and reporting, thereby enhancing the efficiency and accuracy of audits. Predictive analytics play a pivotal role in risk assessment during audits by analysing vast datasets to identify potential risks and anomalies, ensuring comprehensive coverage and evidence-based conclusions.

AI's role in continuous monitoring of financial transactions and activities offers real-time insights, enabling auditors to promptly identify and address unusual activities, thus mitigating risks and preventing potential losses. This proactive approach is complemented by AI's capability to detect fraudulent activities through the analysis of transactional data and user behaviour, further safeguarding the integrity of financial operations.
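To give a sense of how unusual transactions might be surfaced, the sketch below flags amounts that sit far from the typical value using a modified z-score based on the median absolute deviation. This is a deliberately simple statistical stand-in for the AI-driven monitoring described above, not a depiction of any particular audit tool. The function name, the sample amounts, and the 3.5 threshold (a commonly cited default for this score) are assumptions for illustration.

```python
from statistics import median


def flag_unusual(amounts, threshold=3.5):
    """Return indices of amounts whose modified z-score exceeds the threshold."""
    med = median(amounts)
    mad = median([abs(a - med) for a in amounts])
    if mad == 0:
        return []  # no spread to compare against
    return [
        i for i, a in enumerate(amounts)
        if 0.6745 * abs(a - med) / mad > threshold
    ]


# Hypothetical daily transaction amounts; one is far larger than the rest.
amounts = [120.0, 95.5, 130.0, 110.0, 105.0, 9800.0, 115.0]

suspicious = flag_unusual(amounts)
print(suspicious)  # [5]
```

The median-based score is used here because a single extreme value distorts the mean and standard deviation, which can hide the very outlier the auditor is looking for.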

Initiative Spotlight: AI Verify and Project Moonshot

AI Verify is an initiative aimed at creating standardised testing and verification processes for AI systems. It focuses on developing benchmarks and certification processes to ensure that AI models meet high standards of performance and reliability.

Project Moonshot, another significant initiative, aims to develop advanced tools and methodologies for AI auditing. This project seeks to enhance the capabilities of auditors in assessing AI systems, ensuring that they are robust, fair, and transparent.

By working together, industry, academia, and regulatory bodies can share knowledge and expertise, develop common frameworks and standards, and ensure that AI systems uphold high ethical and performance standards. This collaboration can also spur innovation and drive the responsible development and deployment of AI systems. By emphasising transparency, accountability, and the development of robust testing and auditing frameworks, organisations can foster trust in their AI systems and pave the way for their widespread adoption. Through industry-wide collaboration and a steadfast commitment to ethical practices, the AI community can ensure that AI systems serve as dependable tools that benefit society as a whole.

Conclusion

Building trust in AI systems is a multifaceted challenge that requires a concerted effort to enhance transparency, accountability, and robustness. Frameworks such as the ASEAN Guide on AI Governance and Ethics, Singapore PDPC AI Governance Framework, IMDA GenAI Model Governance Framework, AI Verify, and Project Moonshot provide valuable guidance and best practices for achieving these goals.

Addressing fairness, equity, and inclusion in AI is an active area of research. It requires a holistic approach, from fostering an inclusive workforce that embodies critical and diverse knowledge, to seeking input from communities early in the research and development process to develop an understanding of societal contexts. This includes assessing training datasets for potential sources of unfair bias, training models to remove or correct problematic biases, evaluating models for disparities in performance, and continuing adversarial testing of final AI systems for unfair outcomes. Interpretability and accountability are also areas of ongoing research and development at various institutes of higher learning, public-private organisations, and in the broader AI community.

As we reflect on the significance of these practices, it is clear that the journey toward ethical AI deployment is continuous, highlighting the importance of adaptable frameworks and the collective responsibility of developers, regulators, and users alike. By embracing ethics as a cornerstone of AI development, the tech community can navigate the complex challenges that accompany AI's integration into everyday life. This strategic approach not only addresses the current demands for accountability and integrity in AI systems but also lays the groundwork for fostering a future where AI technologies contribute positively to societal progress and human well-being.

Article by: Cecil Su, Cybersecurity

References

- ASEAN Guide on AI Governance and Ethics: https://asean.org/book/asean-guide-on-ai-governance-and-ethics/

- Artificial Intelligence Accountability Policy: https://www.ntia.gov/issues/artificial-intelligence/ai-accountability-policy-report/overview

- AI Risks and Trustworthiness: https://airc.nist.gov/AI_RMF_Knowledge_Base/AI_RMF/Foundational_Information/3-sec-characteristics

- AI Auditing 101: Compliance and Accountability in AI Systems: https://www.zendata.dev/post/ai-auditing-101-compliance-and-accountability-in-ai-systems

- How AI Is Helping in Audit Automation: https://www.highradius.com/resources/Blog/leveraging-ai-in-accounting-audit-1/

- The argument for holistic AI audits: https://oecd.ai/en/wonk/holistic-ai-audits

- Singapore’s Approach to AI Governance: https://www.pdpc.gov.sg/help-and-resources/2020/01/model-ai-governance-framework

- Project Moonshot, powered by AI Verify, and AI Collaborations: https://www.imda.gov.sg/resources/press-releases-factsheets-and-speeches/factsheets/2024/project-moonshot

- Algorithms and the Auditor: https://www.isaca.org/resources/isaca-journal/issues/2021/volume-6/algorithms-and-the-auditor